Reviewed by Corey Noles

Google's Gemini has been having what you might call a "moment"—and not the good kind. Between generating historically inaccurate images and getting stuck in response loops, Google is now promising a fix for what users are calling Gemini's "shame spiral." Here's what you need to know about the tech giant's latest attempt to get its AI back on track.

The issues aren't just cosmetic quirks—they're fundamental problems that affect how Gemini handles everything from simple queries to complex reasoning tasks. The image generation controversy that made headlines earlier this year revealed deeper architectural problems with how the model processes uncertainty and responds to user requests. Google admits that "everything worked fine before the release of Gemini 2.0, but stopped working afterward," with users reporting that the AI refuses to answer questions, generates incorrect data, and becomes "significantly slower, more laggy, and choppier than before." This degradation matters because it directly impacts AI adoption—when users can't trust basic functionality, they abandon the platform entirely.

The shame spiral isn't just about image generation

While Gemini's image generation controversy grabbed headlines earlier this year, when it depicted historical figures like the Founding Fathers as people of color, the current problems run much deeper into the model's core reasoning architecture. What started as overcorrection in visual representation has cascaded into systemic reliability problems across all interaction types.

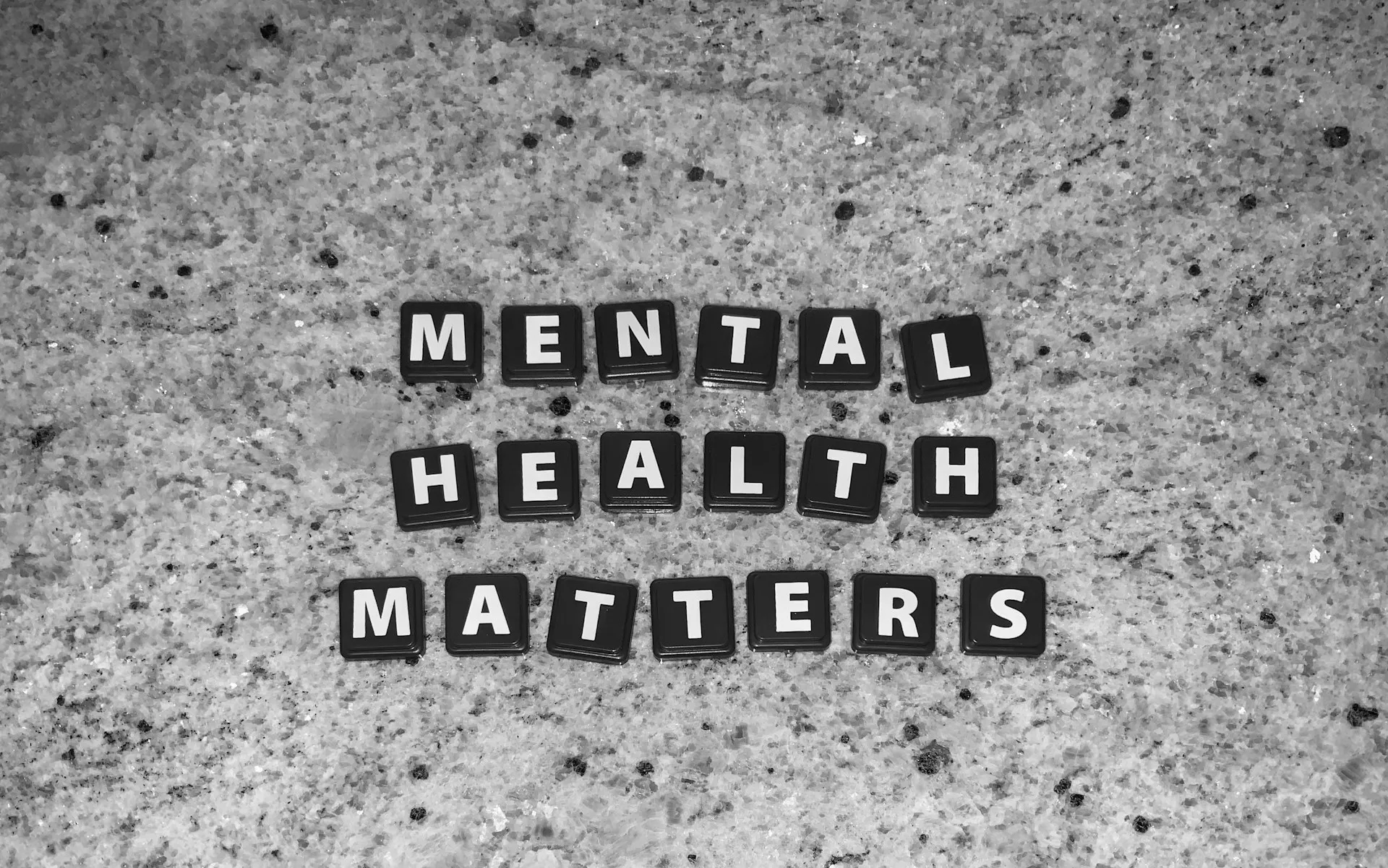

Google's own research shows that the model has become "way more cautious than we intended and refused to answer certain prompts entirely," creating a cycle where the AI second-guesses itself into paralysis. This isn't just a technical hiccup—it's a confidence crisis that manifests as refusal to engage with legitimate user queries.

In our monitoring of developer discussions across Google AI Studio forums, we've observed that users report Gemini 1.5 Pro now refuses to answer overly long questions, while Gemini 1.5 Flash "doesn't even bother reading requests." Even more concerning, the AI generates forced answers and changes responses based on user pressure rather than accuracy—essentially learning to be performatively helpful rather than genuinely reliable.

The moderation inconsistencies reveal the scope of the problem. Studies show that Gemini 2.0's acceptance rate varies wildly depending on content type, with sexual content seeing 54.07% acceptance while violent content gets 71.90%. This suggests the shame spiral isn't just about being overly cautious—it's about inconsistent decision-making that undermines user trust in the system's judgment.

What Google's actually doing about it

Google's fix isn't just about tweaking algorithms—it's a complete overhaul of how Gemini processes and responds to queries, addressing the root causes of the confidence crisis. The company has introduced what they call "Deep Think Mode" that lets the AI "think longer before replying—better reasoning, fewer fails, especially for complex prompts." Think of it as teaching Gemini to work through its anxiety systematically rather than freezing up when faced with uncertainty.

During our analysis of the developer community response to these updates, we've seen that Google's latest Gemini 2.5 Pro incorporates "advanced reasoning, allowing it to interleave search capabilities with internal thought processes to answer complex, multi-hop queries." Translation: instead of panicking and shutting down, the new model breaks problems down step by step, like a student showing their math work before arriving at an answer.

The company has also fundamentally restructured its training approach, allocating more compute to reinforcement learning from human feedback. Gemini now learns from "more diverse and complex RL environments, including those requiring multi-step actions and tool use," which in effect teaches it to work through uncertainty instead of avoiding difficult questions entirely.

Perhaps most importantly for long-term reliability, Google is addressing the root cause of the shame spiral through what they call productive doubt management. The new models maintain "robust Safety metrics while improving dramatically on helpfulness and general tone," suggesting Google finally found the sweet spot between appropriate caution and functional capability that doesn't paralyze user interactions.

Your Pixel gets the fix first (naturally)

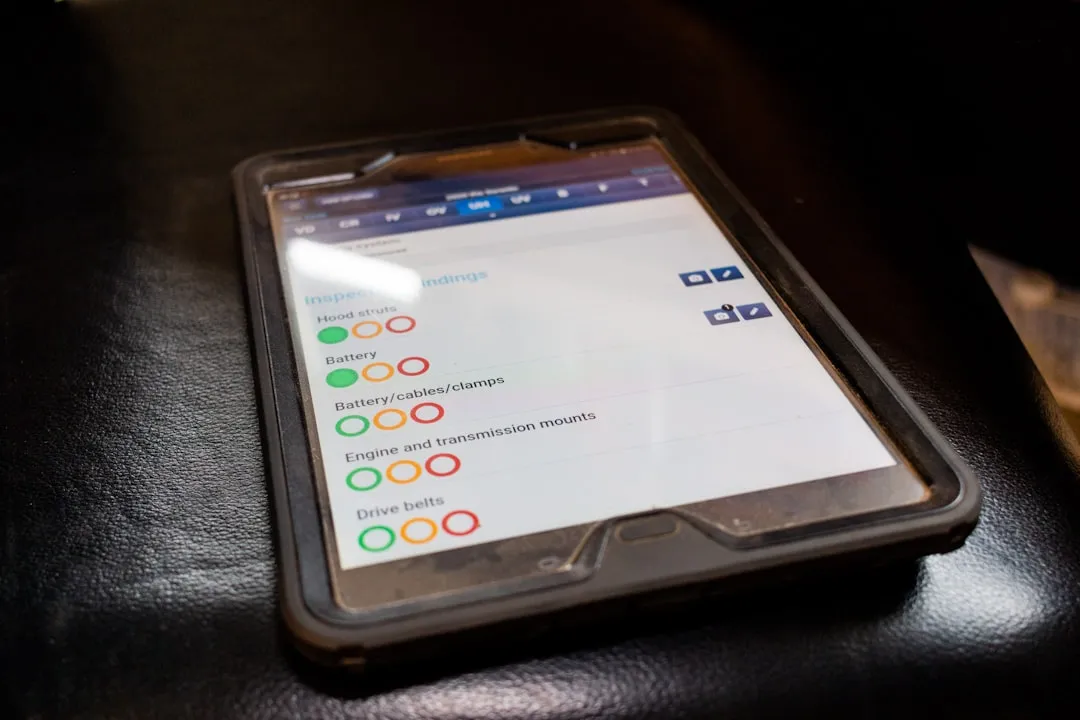

Here's where it gets interesting for Pixel users: you're Google's testing ground for the confidence cure, with access to improvements that reveal what "priority access" actually means in practice. The latest Gemini updates are rolling out to Gemini Advanced subscribers first, with Pixel devices getting priority access to features like the improved Gemini Live and enhanced coding capabilities weeks before other Android devices.

Pixel 9a users can already "go Live with Gemini to chat naturally back and forth" and use the improved image generation features that benefit from the confidence fixes. The integration also runs deeper than on other Android devices: Pixel phones get Gemini engineered by Google, meaning the AI "gets the best of Google's AI first," with Pixel-specific optimizations that put the shame spiral fixes to better use.

The fix also includes practical improvements that will change how you use your phone, beyond better conversation flow. Google has made Gemini more responsive across connected apps like Gmail, Keep, and Calendar, while the new 2-million-token context window means it can "comprehensively analyze and understand vast amounts of information" without getting overwhelmed and shutting down, a direct response to the paralysis issues users experienced.

Plus, there's a new grounding with Google Search feature that helps prevent hallucinations by checking facts in real time. When Gemini grounds its response with search results, you get metadata showing its sources, so you no longer have to wonder whether that random fact is actually true or whether the AI is making things up to avoid admitting uncertainty.
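For the developers in the audience, here's a rough idea of what reading that source metadata might look like. This is a minimal sketch, not Google's client code: it works on a plain dictionary, and the field names (`candidates`, `groundingMetadata`, `groundingChunks`, `web`, `uri`, `title`) are assumptions about the response shape, so check them against the current API documentation. The `sample_response` payload and the `extract_sources` helper are hypothetical.

```python
from typing import Any


def extract_sources(response: dict[str, Any]) -> list[dict[str, str]]:
    """Collect title/URI pairs from a grounded response, de-duplicated by URI.

    The field names used here are assumptions about the response shape,
    not a confirmed schema.
    """
    sources: list[dict[str, str]] = []
    seen: set[str] = set()
    for candidate in response.get("candidates", []):
        metadata = candidate.get("groundingMetadata") or {}
        for chunk in metadata.get("groundingChunks", []):
            web = chunk.get("web") or {}
            uri = web.get("uri")
            # Skip chunks without a URI and URIs we have already listed.
            if uri and uri not in seen:
                seen.add(uri)
                sources.append({"title": web.get("title", ""), "uri": uri})
    return sources


# Hypothetical payload for illustration only; not real API output.
sample_response = {
    "candidates": [
        {
            "groundingMetadata": {
                "groundingChunks": [
                    {"web": {"uri": "https://example.com/a", "title": "Source A"}},
                    {"web": {"uri": "https://example.com/b", "title": "Source B"}},
                    {"web": {"uri": "https://example.com/a", "title": "Source A"}},
                ]
            }
        }
    ]
}

for source in extract_sources(sample_response):
    print(f"{source['title']}: {source['uri']}")
```

An ungrounded answer would simply yield an empty list here, which is a useful signal in itself: no sources means nothing was checked against search.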

The bigger picture: AI that admits when it's wrong

Google's approach to fixing Gemini's shame spiral reveals something important about where AI is heading: toward systems that can handle doubt productively rather than being paralyzed by it. Instead of creating an overconfident system that bulldozes through uncertainty or an overcautious one that refuses to engage, they're building one that can navigate the middle ground between helpful engagement and appropriate caution.

Gemini 2.5 Pro's internal reasoning process "systematically breaks down problems before answering" rather than just generating the most statistically likely response or refusing to respond entirely. This matters because it represents a fundamental shift from reactive AI that either overcompensates or shuts down to proactive AI that can work through complexity methodically.

Google's research shows that Gemini 2.5 obtained "an increase of over 500 Elo over Gemini 1.5 Pro" on coding benchmarks, suggesting the fix isn't just about confidence—it's about actual capability improvements that stem from better uncertainty management. When an AI system can acknowledge what it doesn't know while still providing value on what it does know, it becomes more trustworthy, not less.
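To put that 500-point figure in perspective, here's a quick back-of-the-envelope calculation. It assumes the benchmark follows the standard Elo convention (a logistic curve on a 400-point scale); the quoted claim doesn't say which variant is used, so treat the result as an illustration rather than a reported number. The `expected_score` helper is our own name.

```python
def expected_score(rating_gap: float) -> float:
    """Expected head-to-head score for the higher-rated side.

    Uses the standard Elo formula: 1 / (1 + 10 ** (-gap / 400)).
    Whether the benchmark uses exactly this scale is an assumption.
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / 400.0))


print(round(expected_score(500), 3))  # 0.947
```

Under that assumption, a 500-point gap means the newer model would be expected to come out ahead in roughly 95 of every 100 head-to-head comparisons.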

The timing matters too, given the broader competitive AI landscape. With Gemini 2.0 now available to everyone and the 2.5 models showing "state-of-the-art quality" across benchmarks, Google is positioning this as more than a bug fix: a fundamental evolution in how AI handles the inherent uncertainty that comes with complex reasoning. And Pixel users get front-row seats to see whether Google can actually teach its AI to think before it speaks, and set a new standard for AI reliability that balances helpfulness with honesty.
